Say hello to Google Gemini models... or rather prepare for it

This is probably a day the whole industry has been waiting for: Google has just presented a new model called Gemini. After the PaLM 2 release (with the Gecko, Otter, Bison, and Unicorn models) in May 2023, Gemini was announced as a breakthrough in generative AI (at least for Google, in its global competition with OpenAI, Facebook, and the others).

Yay! Another model that will change the world! But what is it actually?

Is it AGI?

No, it is not.

Is it the GPT-4 successor or the ChatGPT killer?

Maybe. Let’s have a look.

So what actually is Gemini?

According to the official Google AI Blog, Gemini is a new large language model that can work with text, images, audio, and video (i.e. it is multimodal).

It is the most capable AI model in Google's portfolio, it will replace the PaLM 2 and LaMDA models, and it comes in three versions (sizes):

- Nano - embedded on devices (e.g. smartphones)

- Pro - a cloud-hosted, general-purpose model available through an API (and as part of Google Bard, currently available only in the US)

- Ultra - for the most demanding tasks; this is the version described as the actual breakthrough in the market

What are the actual capabilities of Gemini?

The short hands-on video presentation shows Gemini as a kind of “anything-to-anything” model that extends the well-known GPT-4 capabilities with some new features. It can:

- recognize what is going on in a video (e.g. follow a drawing as it is being made, recognize rock-paper-scissors gestures, put planets in the correct order based on simple sketches, track people in CCTV footage, find the ball hidden under one of the cups, etc.)

- generate images in response to prompts that include text and/or images (e.g. suggest what to build from the materials visible on camera)

- produce music based on a text/image prompt (e.g. adding or improving layers of the music, adding new instruments, etc.)

- reason about a text/image prompt (e.g. decide which of the cars sketched by the user would be the fastest)

- and many more…

…all done in real-time. Really mind-blowing.

BONUS: AlphaCode 2. This is not strictly about Gemini, but it is worth mentioning that AlphaCode 2 (a system with Gemini under the hood) is presented as the most advanced AI coding solution in the world, outperforming ~85-90% of human participants in competitive programming contests.

Sounds like a breakthrough, right? Absolutely!

However, there are some concerns about it.

Controversies

The initial enthusiasm quickly turned into disappointment, and some commentators have already called it a fake demo or even accused Google of lying.

What are the concerns?

The presentation. It turned out that Gemini is not as perfect as the demo suggested.

The demo was prepared in a “controlled environment” (as most demos are…?), and most of the allegations concern:

- real-time video analysis - in fact, no video was analyzed in real time; still image frames and text prompts were used to generate the responses, so the video is more of a hypothetical illustration of Gemini's capabilities

- real-time music generation - in fact, pre-generated music samples were used in the responses

- response time - the actual responses were not as fast as shown in the demo and took tens of seconds

Interestingly, this is not the first time Google has been accused of faking an AI demo (see the Google Duplex demo from 2018).

The real disappointment - Gemini Pro vs GPT-4 in benchmarks

The hands-on controversies will definitely have an impact on Gemini's market share and might slow down its expansion and its competition with OpenAI's GPT-4 products, but let's leave these “presentation tricks” aside for a second.

Whoever hasn't faked a demo in a controlled environment, please raise your hand… 😂

We might think now: OK, Gemini is not quite the mind-blowing, super-multimodal rival to the current ChatGPT we thought it was. But from a scientific perspective, what we should really care about is how it performs in the benchmarks, right?

Right, but there is another catch here.

The Gemini Technical Report

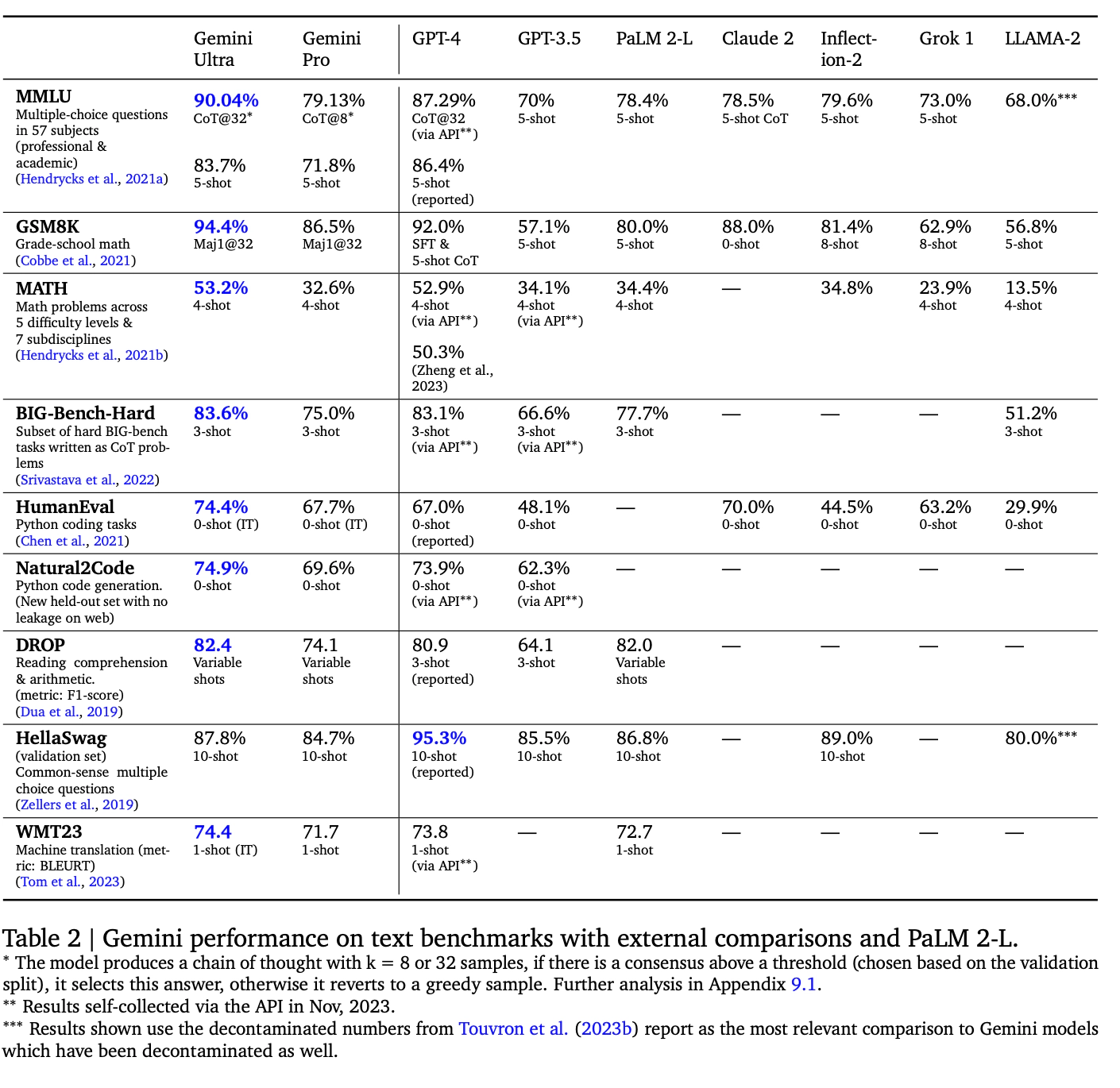

presented in the official Google AI Blog brings some benchmarks and comparisons with the GPT4, LLaMA-2, Claude2, and Grok-1 models.

These results are not as impressive as the hands-on examples, but still, they are quite good.

However, as the community pointed out, the Gemini evaluation used a different prompt engineering technique (Chain of Thought, which generally gives better results… for all LLMs) than the one used for GPT-4 (few-shot prompting), so the two models were not compared under the same conditions.
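To make the difference between the two approaches more concrete, here is a minimal Python sketch of how a few-shot prompt and a chain-of-thought prompt might be built. The worked examples and the helper names (`EXAMPLES`, `few_shot_prompt`, `chain_of_thought_prompt`) are hypothetical and for illustration only; this is not the prompt format used in the Gemini Technical Report.

```python
# Hypothetical worked examples (question, answer, reasoning), for illustration only.
EXAMPLES = [
    ("What is 12 + 7?", "19", "12 + 7 = 19."),
    ("What is 6 * 4?", "24", "6 * 4 = 24."),
]


def few_shot_prompt(question: str) -> str:
    """Few-shot: show plain question/answer pairs, then ask for a direct answer."""
    parts = [f"Q: {q}\nA: {a}" for q, a, _ in EXAMPLES]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def chain_of_thought_prompt(question: str) -> str:
    """Chain of thought: the examples include the reasoning steps,
    and the final cue nudges the model to reason before answering."""
    parts = [
        f"Q: {q}\nA: Let's think step by step. {r} The answer is {a}."
        for q, a, r in EXAMPLES
    ]
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(few_shot_prompt("What is 9 + 5?"))
    print("-----")
    print(chain_of_thought_prompt("What is 9 + 5?"))
```

The only difference between the two prompts is whether the examples (and the final cue) contain intermediate reasoning. Comparing one model prompted one way with another model prompted the other way mixes the effect of the model with the effect of the prompt.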

It is also a bit disappointing that Gemini Pro underperforms GPT-4 on most of the benchmarks and that only the Ultra version outperforms GPT-4 in most categories.

But let’s give it a chance…

That's all fine, since we can always check it ourselves, right? Not so fast. Gemini is not fully available for public use yet.

When will Gemini be available?

Given that we only “have access” to Gemini Pro (from the US) as part of Google Bard, and that we cannot play with the Ultra version to evaluate its full potential, Gemini is more of a marketing promise for now.

However, Gemini Ultra should be available in Bard Advanced in January 2024, so it is not that far away.

What about privacy?

…

What about tips for getting better answers? :)

I guess we need to wait for the wider release to see how it will work in the real world.